Bayesian inference

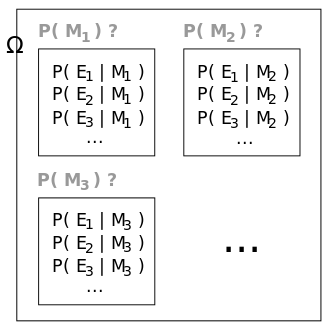

Bayesian inference is a method of statistical inference in which Bayes' theorem is used to update the probability for a hypothesis as more evidence or information becomes available. Bayesian inference is an important technique in statistics, and especially in mathematical statistics, that has applications in a wide range of disciplines, including engineering, biology, chemistry, and social sciences.

Overview

Bayesian inference derives its name from Thomas Bayes, who formulated a specific case of Bayes' theorem in his paper published posthumously in 1763. The general form of Bayes' theorem provides a way to update the probabilities of hypotheses based on observed evidence. In the context of Bayesian inference, the probability of a hypothesis before observing the evidence is known as the prior probability. The probability of observing the evidence given that the hypothesis is true is known as the likelihood. The updated probability of the hypothesis after observing the evidence is known as the posterior probability.
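The prior, likelihood and posterior cycle described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a standard implementation: the three coin-bias hypotheses, the uniform prior and the observed flips are hypothetical, and the helper name `update` is introduced only for this example. Each posterior is reused as the prior for the next observation.

```python
# Sequential Bayesian updating over a small, discrete set of hypotheses.
# Illustrative assumption: each hypothesis is a possible bias (probability of heads) of a coin.

def update(prior: list[float], likelihoods: list[float]) -> list[float]:
    """Return posteriors P(H_i | E) from priors P(H_i) and likelihoods P(E | H_i)."""
    unnormalized = [p * l for p, l in zip(prior, likelihoods)]
    evidence = sum(unnormalized)  # P(E), the normalizing constant
    return [u / evidence for u in unnormalized]


biases = [0.25, 0.5, 0.75]        # hypothetical hypotheses H_1, H_2, H_3
belief = [1 / 3, 1 / 3, 1 / 3]    # uniform prior over the three hypotheses

for flip in ["H", "H", "T"]:      # hypothetical observations, processed one at a time
    likelihoods = [b if flip == "H" else 1 - b for b in biases]
    belief = update(belief, likelihoods)  # this posterior is the prior for the next flip

print([round(p, 4) for p in belief])
```

Processing the three flips one at a time gives posteriors of 0.15, 0.40 and 0.45, the same result as conditioning on all three flips at once, because the likelihoods of observations that are independent given the hypothesis simply multiply.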

Mathematical Formulation

The mathematical formulation of Bayesian inference involves calculating the posterior probability according to Bayes' theorem. The theorem is expressed as:

\[ P(H|E) = \frac{P(E|H) \cdot P(H)}{P(E)} \]

where:

- \(P(H|E)\) is the posterior probability of the hypothesis \(H\) given the evidence \(E\),

- \(P(E|H)\) is the likelihood of observing evidence \(E\) given that hypothesis \(H\) is true,

- \(P(H)\) is the prior probability of hypothesis \(H\), and

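- \(P(E)\) is the marginal probability of observing the evidence (also called the marginal likelihood or model evidence), which acts as a normalizing constant so that the posterior probabilities of all competing hypotheses sum to one.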
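When a hypothesis \(H\) is weighed against its complement \(\neg H\), the evidence term can be expanded with the law of total probability, which is usually how \(P(E)\) is computed in practice:

\[ P(E) = P(E|H) \cdot P(H) + P(E|\neg H) \cdot P(\neg H) \]

so that

\[ P(H|E) = \frac{P(E|H) \cdot P(H)}{P(E|H) \cdot P(H) + P(E|\neg H) \cdot P(\neg H)} \]

More generally, for mutually exclusive and exhaustive hypotheses \(H_1, \dots, H_n\), \(P(E) = \sum_{i=1}^{n} P(E|H_i) \cdot P(H_i)\).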

Applications

Bayesian inference has a wide range of applications across many fields. In medicine, it is used in disease screening, for example to estimate the probability that a patient who tests positive actually has the condition, and in clinical decision-making based on patient data. In machine learning, Bayesian methods underlie spam filters (such as naive Bayes classifiers) and probabilistic models that update their estimates as new data arrive. In environmental science, Bayesian inference is used to model climate change and to assess the impact of human activities on the environment while quantifying the uncertainty in those estimates.
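The disease-screening use can be made concrete with Bayes' theorem, where \(P(E)\), the overall probability of a positive result, is obtained by summing over diseased and healthy patients. The prevalence, sensitivity and specificity below are hypothetical values chosen only for illustration, and the function name `posterior_given_positive` is introduced for this sketch. The second call also assumes, for simplicity, that repeated test results are conditionally independent given disease status.

```python
def posterior_given_positive(prevalence: float, sensitivity: float, specificity: float) -> float:
    """Return P(disease | positive test) using Bayes' theorem."""
    p_pos_if_disease = sensitivity          # likelihood P(E | H)
    p_pos_if_healthy = 1.0 - specificity    # false-positive rate P(E | not H)
    # P(E): total probability of a positive result across diseased and healthy patients
    p_pos = p_pos_if_disease * prevalence + p_pos_if_healthy * (1.0 - prevalence)
    return p_pos_if_disease * prevalence / p_pos


# Hypothetical test characteristics, chosen only for illustration.
first = posterior_given_positive(prevalence=0.01, sensitivity=0.99, specificity=0.95)
# Assumed conditionally independent second positive result: the first posterior becomes the new prior.
second = posterior_given_positive(prevalence=first, sensitivity=0.99, specificity=0.95)

print(f"after one positive test:  {first:.3f}")   # 0.167
print(f"after two positive tests: {second:.3f}")  # 0.798
```

Under these assumed numbers, a fairly accurate test yields only about a 17% posterior probability of disease after one positive result, because the condition is rare and the prior is low; a second positive result raises it to roughly 80%.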

Advantages and Disadvantages

One of the main advantages of Bayesian inference is its flexibility in incorporating prior knowledge about a system or phenomenon, which is particularly useful when data are limited or expensive to obtain. A significant disadvantage is that the choice of prior is partly subjective: analysts who start from different priors can reach different conclusions from the same data, especially when the data are sparse. As more data accumulate, the likelihood typically dominates and the influence of the prior diminishes.
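The effect of the prior can be illustrated with the Beta-Binomial model, a standard conjugate pair in which a Beta(a, b) prior on a success probability, combined with k successes in n trials, gives a Beta(a + k, b + n - k) posterior. The two priors and the two datasets below are hypothetical, and the helper names are introduced only for this sketch.

```python
# Prior sensitivity in the Beta-Binomial conjugate model (illustrative sketch).

def beta_binomial_posterior(a: float, b: float, successes: int, trials: int) -> tuple[float, float]:
    """Conjugate update: Beta(a, b) prior + binomial data -> Beta(a + k, b + n - k) posterior."""
    return a + successes, b + trials - successes


def beta_mean(a: float, b: float) -> float:
    """Mean of a Beta(a, b) distribution."""
    return a / (a + b)


# Hypothetical priors: a flat prior and a skeptical prior centered on 0.2.
priors = {"flat Beta(1, 1)": (1, 1), "skeptical Beta(20, 80)": (20, 80)}
# Hypothetical data with the same success rate (70%) at two sample sizes.
datasets = {"7/10": (7, 10), "700/1000": (700, 1000)}

results = {}
for data_label, (k, n) in datasets.items():
    for prior_label, (a, b) in priors.items():
        post_a, post_b = beta_binomial_posterior(a, b, k, n)
        results[(data_label, prior_label)] = beta_mean(post_a, post_b)
        print(f"{data_label:>8}  {prior_label:<22}  posterior mean = {results[(data_label, prior_label)]:.3f}")
```

With these assumed numbers, the two posterior means differ by about 0.42 after 10 trials but by only about 0.05 after 1,000 trials, so the same data overwhelm the difference between the priors as the sample grows.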

Conclusion

Bayesian inference is a powerful and versatile method for statistical analysis that allows for the incorporation of prior knowledge and the updating of probabilities with new evidence. Its applications span a wide range of fields, demonstrating its utility in solving complex problems.


WikiMD's Wellness Encyclopedia


Credits: Most images are courtesy of Wikimedia Commons, and templates and categories of Wikipedia, licensed under CC BY-SA or similar.
