Entropy

Entropy is a fundamental concept in thermodynamics and statistical mechanics, with a broad range of applications in physics, chemistry, and information theory. It is often described as a measure of disorder or randomness in a system.

Definition

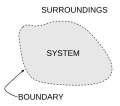

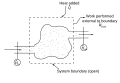

In a thermodynamic system, entropy measures the number of specific ways in which the system may be arranged, and it is often taken as a measure of disorder. The concept was introduced by Rudolf Clausius, who derived the name from the Greek word for "transformation". He considered transfers of energy between bodies and observed a directional bias in the way energy moves.

Thermodynamics

In thermodynamics, entropy is a state function, often interpreted as the degree of disorder or randomness in a system. The second law of thermodynamics states that the entropy of an isolated system never decreases: it increases in irreversible processes and remains constant only in reversible ones.
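The second law can be illustrated with a small numerical sketch. It assumes two idealized heat reservoirs whose temperatures stay fixed, and it uses the Clausius relation ΔS = Q/T for each reservoir, which the text above does not state explicitly. The function name and the sample values are hypothetical, chosen only for illustration.

```python
def total_entropy_change(q_joules, t_hot_kelvin, t_cold_kelvin):
    """Total entropy change (J/K) when heat q flows from a hot to a cold reservoir.

    Assumes both reservoirs are large enough that their temperatures do not
    change, so each one contributes Q/T (Clausius relation).
    """
    if t_hot_kelvin <= 0 or t_cold_kelvin <= 0:
        raise ValueError("absolute temperatures must be positive")
    ds_hot = -q_joules / t_hot_kelvin   # the hot reservoir loses heat
    ds_cold = q_joules / t_cold_kelvin  # the cold reservoir gains heat
    return ds_hot + ds_cold


# Illustrative values: 1000 J flowing from 400 K to 300 K.
forward = total_entropy_change(1000.0, 400.0, 300.0)
# The reverse direction (cold to hot) is modeled by a negative q.
reverse = total_entropy_change(-1000.0, 400.0, 300.0)

print(f"hot -> cold: {forward:+.4f} J/K")
print(f"cold -> hot: {reverse:+.4f} J/K")
```

Under these assumptions the total is positive when heat flows from hot to cold and negative for the reverse direction, which is why the reverse flow does not occur spontaneously in an isolated system.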

Statistical Mechanics

In statistical mechanics, entropy is a measure of the number of ways that the particles in a system can be arranged to produce a specific macrostate, with each arrangement being a microstate. The entropy of a system is then defined as the natural logarithm of the number of microstates, multiplied by the Boltzmann constant.
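The definition above corresponds to the Boltzmann formula S = k_B ln W, where W is the number of microstates. The following is a minimal sketch of that calculation. The two-state toy system (N particles, each either "up" or "down") and the function names are assumptions introduced for illustration, not part of the original text.

```python
import math

# Boltzmann constant in J/K (exact value in the SI since 2019).
K_B = 1.380649e-23


def boltzmann_entropy(num_microstates):
    """Return S = k_B * ln(W) for a macrostate with W microstates."""
    if num_microstates < 1:
        raise ValueError("a macrostate needs at least one microstate")
    return K_B * math.log(num_microstates)


def two_state_microstates(n_particles, n_up):
    """Count arrangements of n_particles two-state particles with n_up 'up'."""
    return math.comb(n_particles, n_up)


# Hypothetical toy system: 100 two-state particles.
ordered = two_state_microstates(100, 0)    # all 'down': one arrangement
mixed = two_state_microstates(100, 50)     # half 'up': many arrangements

print("S(all down) =", boltzmann_entropy(ordered), "J/K")
print("S(half up)  =", boltzmann_entropy(mixed), "J/K")
```

In this toy model the fully ordered macrostate has a single microstate and therefore zero entropy, while the evenly mixed macrostate has the largest number of microstates and the highest entropy.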

Information Theory

In information theory, entropy measures the uncertainty, or randomness, of a random variable or data source. The concept is closely related to thermodynamic entropy, but the two are not identical.
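As a rough sketch of how this uncertainty can be quantified, the example below uses the standard Shannon formula H = Σ p·log2(1/p), measured in bits. The formula is not given in the text above, and estimating the probabilities from symbol frequencies in a sample is a simplifying assumption. The function name is hypothetical.

```python
import math
from collections import Counter


def shannon_entropy(data):
    """Estimate Shannon entropy in bits per symbol from symbol frequencies."""
    total = len(data)
    if total == 0:
        raise ValueError("data must contain at least one symbol")
    counts = Counter(data)
    # Sum p * log2(1/p) over the observed symbols.
    return sum((c / total) * math.log2(total / c) for c in counts.values())


print(shannon_entropy("aaaa"))  # one symbol only: no uncertainty
print(shannon_entropy("abab"))  # two equally likely symbols
print(shannon_entropy("abcd"))  # four equally likely symbols
```

A sequence made of a single repeated symbol carries no uncertainty, while sequences whose symbols are equally likely reach the maximum entropy for their alphabet size.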

