Principal component analysis

CSV import Tags: mobile edit mobile web edit |

CSV import |

||

| Line 29: | Line 29: | ||

[[Category:Dimension reduction]] | [[Category:Dimension reduction]] | ||

{{Statistics-stub}} | {{Statistics-stub}} | ||

== Principal component analysis == | |||

<gallery> | |||

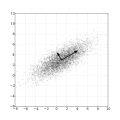

File:GaussianScatterPCA.svg|Gaussian Scatter PCA | |||

File:SCREE_plot.jpg|SCREE plot | |||

File:PCA_of_Haplogroup_J_using_37_STRs.png|PCA of Haplogroup J using 37 STRs | |||

File:PCA_versus_Factor_Analysis.jpg|PCA versus Factor Analysis | |||

File:Fractional_Residual_Variances_comparison,_PCA_and_NMF.pdf|Fractional Residual Variances comparison, PCA and NMF | |||

File:AirMerIconographyCorrelation.jpg|AirMer Iconography Correlation | |||

File:Elmap_breastcancer_wiki.png|Elmap breast cancer | |||

</gallery> | |||

Latest revision as of 20:57, 23 February 2025

Principal component analysis (PCA) is a statistical procedure that uses an orthogonal transformation to convert a set of observations of possibly correlated variables into a set of values of linearly uncorrelated variables called principal components. The transformation is defined so that the first principal component has the largest possible variance (that is, it accounts for as much of the variability in the data as possible), and each succeeding component in turn has the highest variance possible under the constraint that it is orthogonal to the preceding components. The resulting component directions form an orthogonal basis, and the data expressed in that basis are uncorrelated. PCA is sensitive to the relative scaling of the original variables: rescaling one variable generally changes the components.
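The properties described above can be checked numerically. The following is a minimal sketch, assuming NumPy and a small synthetic data set; the function name `principal_components` and the variables are illustrative only and do not come from any particular library. It computes the components from the sample covariance matrix, then rescales one variable to show how the first component changes.

```python
import numpy as np

def principal_components(X):
    """Return (variances, components) for data X with one observation per row.

    Components are the columns of the returned matrix, ordered by
    decreasing variance.
    """
    Xc = X - X.mean(axis=0)                  # center each variable
    cov = np.cov(Xc, rowvar=False)           # sample covariance matrix
    eigvals, eigvecs = np.linalg.eigh(cov)   # eigh returns ascending eigenvalues
    order = np.argsort(eigvals)[::-1]        # reorder to descending variance
    return eigvals[order], eigvecs[:, order]

# Synthetic example (an assumption for illustration): two correlated variables.
rng = np.random.default_rng(0)
x = rng.normal(size=500)
y = 0.8 * x + 0.6 * rng.normal(size=500)
X = np.column_stack([x, y])

variances, components = principal_components(X)

# Express the centered data in the new basis; the scores are uncorrelated.
scores = (X - X.mean(axis=0)) @ components
score_cov = np.cov(scores, rowvar=False)

# Rescale the second variable by 100: the first component now points
# almost entirely along that variable.
X_scaled = X * np.array([1.0, 100.0])
_, components_scaled = principal_components(X_scaled)
```

Here the off-diagonal entries of `score_cov` are zero up to rounding error, while the first column of `components_scaled` differs markedly from the first column of `components`. This is why variables measured in different units are often standardized before PCA is applied.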

History

PCA was invented in 1901 by Karl Pearson, as an analogue of the principal axis theorem in mechanics; it was later independently developed and named by Harold Hotelling in the 1930s.

Definition

Given a set of observations (for example, a set of images), each one being a high-dimensional vector, PCA provides a way to reduce the dimensionality of the data, while retaining as much as possible of the variation present in the original data set.
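As an illustration of this kind of dimensionality reduction, the sketch below projects synthetic 10-dimensional observations onto their first two principal components and reports the fraction of total variance retained. It assumes NumPy and uses the singular value decomposition of the centered data, which is one common way to compute PCA; the helper `pca_reduce` and the synthetic data are hypothetical and serve only as an example.

```python
import numpy as np

def pca_reduce(X, k):
    """Project the rows of X onto the first k principal components.

    Returns the k-dimensional scores, the component directions (as rows),
    the variable means, and the fraction of total variance retained.
    """
    mean = X.mean(axis=0)
    Xc = X - mean
    # Rows of Vt are the principal directions, ordered by decreasing
    # singular value; squared singular values are proportional to variance.
    U, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    components = Vt[:k]
    scores = Xc @ components.T
    explained = (S[:k] ** 2).sum() / (S ** 2).sum()
    return scores, components, mean, explained

# Synthetic data (an assumption for illustration): 200 observations in
# 10 dimensions that lie close to a 2-dimensional subspace.
rng = np.random.default_rng(1)
latent = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 10))
X = latent @ mixing + 0.01 * rng.normal(size=(200, 10))

scores, components, mean, explained = pca_reduce(X, k=2)

# Map the reduced representation back to the original space.
X_approx = scores @ components + mean
```

Because the synthetic data are nearly two-dimensional by construction, `explained` is close to 1 and `X_approx` is close to `X`. With real data the number of components to keep is a judgment call, often guided by a scree plot of the variances.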

Mathematical details

PCA is mathematically defined as an orthogonal linear transformation that maps the data to a new coordinate system such that the direction of greatest variance of the data lies along the first coordinate (called the first principal component), the direction of second greatest variance along the second coordinate, and so on.
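One standard way to write this definition is sketched below. The notation is an assumption introduced here for illustration: X is an n × p data matrix whose columns have been centered, w_k is the unit-length weight vector of the k-th component, and t_k is the corresponding vector of component scores.

```latex
% First component: the unit vector along which the projected data
% have the greatest variance.
\mathbf{w}_1 = \arg\max_{\|\mathbf{w}\| = 1} \|\mathbf{X}\mathbf{w}\|^2
             = \arg\max_{\|\mathbf{w}\| = 1} \mathbf{w}^{\mathsf{T}} \mathbf{X}^{\mathsf{T}} \mathbf{X} \mathbf{w}

% k-th component: remove the first k-1 components from the data,
% then repeat the maximization on what remains.
\hat{\mathbf{X}}_k = \mathbf{X} - \sum_{s=1}^{k-1} \mathbf{X} \mathbf{w}_s \mathbf{w}_s^{\mathsf{T}},
\qquad
\mathbf{w}_k = \arg\max_{\|\mathbf{w}\| = 1} \|\hat{\mathbf{X}}_k \mathbf{w}\|^2

% Scores of the observations on the k-th component.
\mathbf{t}_k = \mathbf{X} \mathbf{w}_k
```

Under these assumptions the vectors w_k are eigenvectors of X^T X, which is proportional to the sample covariance matrix, taken in order of decreasing eigenvalue.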

Applications

PCA is used in exploratory data analysis and for making predictive models. It is commonly used in fields such as face recognition and image compression, and is a common technique for finding patterns in data of high dimension.

Principal component analysis

- Gaussian Scatter PCA
- SCREE plot
- PCA of Haplogroup J using 37 STRs
- PCA versus Factor Analysis
- Fractional Residual Variances comparison, PCA and NMF
- AirMer Iconography Correlation
- Elmap breast cancer