Eigenvalues and eigenvectors

CSV import Tags: mobile edit mobile web edit |

CSV import |

||

| Line 31: | Line 31: | ||

{{math-stub}} | {{math-stub}} | ||

<gallery> | |||

File:Mona_Lisa_eigenvector_grid.png|Eigenvalues and eigenvectors | |||

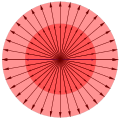

File:Eigenvectors_of_a_linear_operator.gif|Eigenvectors of a linear operator | |||

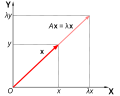

File:Eigenvalue_equation.svg|Eigenvalue equation | |||

File:Eigenvectors.gif|Eigenvectors | |||

File:Eigenvectors-extended.gif|Eigenvectors extended | |||

File:Homothety_in_two_dim.svg|Homothety in two dimensions | |||

File:Unequal_scaling.svg|Unequal scaling | |||

File:Rotation.png|Rotation | |||

File:Shear.svg|Shear | |||

File:Squeeze_r=1.5.svg|Squeeze with r=1.5 | |||

File:GaussianScatterPCA.png|Gaussian Scatter PCA | |||

File:Mode_Shape_of_a_Tuning_Fork_at_Eigenfrequency_440.09_Hz.gif|Mode Shape of a Tuning Fork at Eigenfrequency 440.09 Hz | |||

</gallery> | |||

Latest revision as of 11:46, 18 February 2025

Eigenvalues and eigenvectors are fundamental concepts in linear algebra with applications across mathematics and the sciences, including differential equations, quantum mechanics, systems theory, and statistics. They are particularly important in the analysis of linear transformations and of systems of linear differential equations.

Definition

Given a square matrix A, an eigenvector of A is a nonzero vector v such that multiplication by A alters only the scale of v: \[A\mathbf{v} = \lambda\mathbf{v}\] Here, \(\lambda\) is a scalar known as the eigenvalue associated with the eigenvector v.
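As a concrete check of the definition, the following minimal sketch verifies \(A\mathbf{v} = \lambda\mathbf{v}\) for a small matrix. The matrix, the candidate vectors, and the helper name `matvec` are illustrative assumptions, not part of the definition itself.

```python
# Illustrative check of A v = lambda v for a 2x2 matrix.
# The matrix and vectors below are example values chosen for this sketch.

def matvec(A, v):
    """Multiply matrix A (a list of rows) by vector v (a list)."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

A = [[2, 1],
     [1, 2]]

# Candidate eigenpairs of A: (eigenvalue, eigenvector).
pairs = [(3, [1, 1]), (1, [1, -1])]

for lam, v in pairs:
    Av = matvec(A, v)
    scaled = [lam * x for x in v]
    print(f"A v = {Av}, lambda v = {scaled}, equal: {Av == scaled}")

# A vector that is not an eigenvector changes direction under A.
w = [1, 0]
print(matvec(A, w))  # [2, 1], which is not a multiple of [1, 0]
```

Both candidate pairs satisfy the equation exactly, while the vector `[1, 0]` is mapped to a vector pointing in a different direction, so it is not an eigenvector of this matrix.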

Properties

- Characteristic Polynomial: The eigenvalues of a matrix A are the roots of the characteristic polynomial \(p(\lambda) = \det(A - \lambda I)\), where I is the identity matrix of the same dimension as A. Equivalently, they are the solutions of the characteristic equation \(\det(A - \lambda I) = 0\).

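- Multiplicity: The algebraic multiplicity of an eigenvalue is the number of times it occurs as a root of the characteristic polynomial. Its geometric multiplicity is the dimension of its eigenspace, that is, the maximum number of linearly independent eigenvectors associated with it. The geometric multiplicity never exceeds the algebraic multiplicity.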

- Diagonalization: A matrix A is diagonalizable if there exist a diagonal matrix D and an invertible matrix P such that \(A = PDP^{-1}\). The diagonal entries of D are the eigenvalues of A, and the columns of P are the corresponding eigenvectors, listed in the same order.

- Spectral Theorem: For real symmetric matrices, the spectral theorem states that the matrix can be diagonalized by an orthogonal matrix and that all of its eigenvalues are real.
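The characteristic polynomial and diagonalization properties can be illustrated for a 2x2 matrix, where \(\det(A - \lambda I) = \lambda^2 - \operatorname{tr}(A)\,\lambda + \det(A)\) can be solved with the quadratic formula. This is a minimal sketch, not a general method: the function names `eigen_2x2`, `matmul`, and `inverse_2x2` and the example matrix are assumptions made for illustration, and the code only handles real, distinct eigenvalues with a nonzero upper-right entry.

```python
import math

def eigen_2x2(A):
    """Eigenvalues and eigenvectors of a real 2x2 matrix.

    Sketch only: assumes real, distinct eigenvalues and a nonzero
    upper-right entry b.
    """
    (a, b), (c, d) = A
    trace, det = a + d, a * d - b * c
    # det(A - lambda I) = lambda^2 - trace * lambda + det
    disc = trace * trace - 4 * det
    if disc <= 0 or b == 0:
        raise ValueError("sketch only handles distinct real eigenvalues with b != 0")
    root = math.sqrt(disc)
    eigenvalues = [(trace + root) / 2, (trace - root) / 2]
    # The first row of (A - lambda I) v = 0 reads (a - lambda) x + b y = 0,
    # which is solved by v = (b, lambda - a).
    eigenvectors = [[b, lam - a] for lam in eigenvalues]
    return eigenvalues, eigenvectors

def matmul(X, Y):
    """Product of two 2x2 matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def inverse_2x2(M):
    """Inverse of an invertible 2x2 matrix."""
    (p, q), (r, s) = M
    det = p * s - q * r
    return [[s / det, -q / det], [-r / det, p / det]]

A = [[4, 1], [2, 3]]
(l1, l2), (v1, v2) = eigen_2x2(A)

P = [[v1[0], v2[0]], [v1[1], v2[1]]]  # eigenvectors as columns
D = [[l1, 0], [0, l2]]                # eigenvalues in the same order
reconstructed = matmul(matmul(P, D), inverse_2x2(P))

print(l1, l2)         # 5.0 2.0
print(reconstructed)  # approximately [[4, 1], [2, 3]]
```

The restriction to distinct eigenvalues is deliberate. A shear matrix such as `[[1, 1], [0, 1]]` has the eigenvalue 1 with algebraic multiplicity 2 but geometric multiplicity 1, so it has too few independent eigenvectors to form an invertible P and is not diagonalizable; the sketch raises `ValueError` for it.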

Applications

- In quantum mechanics, the time-independent Schrödinger equation is an eigenvalue problem for the Hamiltonian operator: its eigenvalues are the energy levels of the quantum system, and its eigenvectors are the corresponding stationary states.

- In vibration analysis, the eigenvalues determine the natural frequencies at which a structure resonates, and the eigenvectors give the corresponding mode shapes.

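- In statistics, Principal Component Analysis (PCA) uses the eigenvectors of the data's covariance matrix as principal directions and the eigenvalues as the variance along each direction, which supports dimensionality reduction and data analysis.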

- In graph theory, the eigenvalues of the adjacency matrix of a graph are related to many properties of the graph, such as its connectivity and its number of walks.
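The graph-theory connection can be made concrete with a standard fact: the number of closed walks of length k in a graph equals the trace of the k-th power of its adjacency matrix, which in turn equals the sum of the k-th powers of its eigenvalues. The sketch below checks this on a triangle graph. The choice of graph and the helper names `matvec`, `matmul`, and `closed_walks` are assumptions made for illustration.

```python
# Triangle graph: every vertex is adjacent to the other two.
A = [[0, 1, 1],
     [1, 0, 1],
     [1, 1, 0]]

def matvec(M, v):
    """Multiply matrix M (a list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def matmul(X, Y):
    """Product of two square matrices given as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

# Eigenpairs of A, verified here rather than taken on trust.
eigenpairs = [(2, [1, 1, 1]), (-1, [1, -1, 0]), (-1, [1, 0, -1])]
for lam, v in eigenpairs:
    assert matvec(A, v) == [lam * x for x in v]

def closed_walks(M, k):
    """Count closed walks of length k (k >= 1) as the trace of M^k."""
    power = M
    for _ in range(k - 1):
        power = matmul(power, M)
    return sum(power[i][i] for i in range(len(M)))

for k in range(1, 6):
    from_spectrum = sum(lam ** k for lam, _ in eigenpairs)
    print(k, closed_walks(A, k), from_spectrum)
```

For each k the two counts agree. For example, there are 6 closed walks of length 3, namely the triangle traversed from each of its 3 vertices in either of 2 directions.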

Calculation

The calculation of eigenvalues and eigenvectors is a fundamental problem in numerical linear algebra. For all but the smallest matrices they are computed with iterative algorithms, including the QR algorithm, power iteration (which approximates the eigenvalue of largest absolute value), and the Jacobi eigenvalue algorithm for symmetric matrices.
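Of the algorithms named above, power iteration is the simplest to sketch: multiply a vector by the matrix repeatedly and renormalize, so that the component along the dominant eigenvector grows fastest. The following is a minimal illustration under stated assumptions, not a production routine: the function name `power_iteration`, the fixed iteration count, and the all-ones starting vector are choices made for this example.

```python
import math

def power_iteration(A, num_iters=100):
    """Estimate the dominant eigenvalue and eigenvector of a square matrix.

    Assumes A has a single eigenvalue of largest absolute value and that
    the starting vector has a nonzero component along its eigenvector.
    """
    n = len(A)
    v = [1.0] * n  # arbitrary starting vector (an assumption of this sketch)
    for _ in range(num_iters):
        w = [sum(a * x for a, x in zip(row, v)) for row in A]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # With v of unit length, the Rayleigh quotient v^T A v estimates the eigenvalue.
    Av = [sum(a * x for a, x in zip(row, v)) for row in A]
    eigenvalue = sum(x * y for x, y in zip(v, Av))
    return eigenvalue, v

A = [[2.0, 1.0],
     [1.0, 3.0]]
lam, v = power_iteration(A)
print(lam)  # close to (5 + sqrt(5)) / 2, the larger root of lambda^2 - 5*lambda + 5
```

Convergence slows as the two largest eigenvalues approach each other in absolute value, which is one reason library routines such as `numpy.linalg.eig` rely on more robust methods.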

Gallery

- Eigenvalues and eigenvectors

- Eigenvectors of a linear operator

- Eigenvalue equation

- Eigenvectors

- Eigenvectors extended

- Homothety in two dimensions

- Unequal scaling

- Rotation

- Shear

- Squeeze with r=1.5

- Gaussian Scatter PCA

- Mode Shape of a Tuning Fork at Eigenfrequency 440.09 Hz