Unsupervised learning

From WikiMD's Wellness Encyclopedia


Latest revision as of 11:18, 18 February 2025

Type of machine learning algorithm

Unsupervised learning is a type of machine learning in which a model is trained on unlabeled data, with no target outputs telling it what to predict. Instead, the model learns the underlying structure of the data by identifying patterns and relationships. This contrasts with supervised learning, where the model is trained on labeled data.

Overview

Unsupervised learning draws inferences from datasets that consist of input data without labeled responses. Two of the most common unsupervised learning tasks are clustering and association.

- Clustering: This involves grouping a set of objects in such a way that objects in the same group (or cluster) are more similar to each other than to those in other groups. K-means clustering and hierarchical clustering are popular clustering algorithms.

- Association: This involves discovering interesting relations between variables in large databases. A common example is market basket analysis, which is used to identify sets of products that frequently co-occur in transactions.
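As an illustration of the clustering task described above, the following is a minimal sketch of k-means (Lloyd's algorithm) using only the Python standard library. The function name `kmeans`, the sample `points`, and the choice to seed the centroids with the first `k` points are assumptions made for this example; practical implementations usually use randomized or k-means++ initialization.

```python
from math import dist


def kmeans(points, k, iterations=100):
    """Lloyd's algorithm: alternate an assignment step and an update step."""
    # Assumption for reproducibility: seed centroids with the first k points.
    centroids = [tuple(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        labels = [min(range(k), key=lambda c: dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its assigned points.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append(tuple(sum(x) / len(members) for x in zip(*members)))
            else:
                # Keep the old centroid if a cluster ends up empty.
                new_centroids.append(centroids[c])
        if new_centroids == centroids:
            break  # assignments have stabilized
        centroids = new_centroids
    return labels, centroids


# Hypothetical 2-D data with two visually obvious groups.
points = [(1.0, 1.0), (5.0, 5.0), (1.2, 0.8), (0.8, 1.2), (5.2, 4.8), (4.8, 5.2)]
labels, centroids = kmeans(points, k=2)
print(labels)
print(centroids)
```

No labels are supplied at any point: the grouping emerges only from the distances between points, which is what distinguishes this from a supervised classifier.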

Techniques

Several techniques are used in unsupervised learning, including:

- Neural networks: These are computational models inspired by the human brain, consisting of interconnected groups of artificial neurons. Architectures such as autoencoders, Hopfield networks, and restricted Boltzmann machines can be trained without labels.

- Dimensionality reduction: Techniques such as Principal Component Analysis (PCA) and t-distributed Stochastic Neighbor Embedding (t-SNE) reduce the number of variables (features) under consideration while preserving as much of the data's structure as possible.

- Anomaly detection: This involves identifying rare items, events, or observations that raise suspicions by differing significantly from the majority of the data.
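The anomaly detection item above can be sketched with a simple robust statistic. The example below flags values whose modified z-score, based on the median and the median absolute deviation (MAD), exceeds a threshold. The function name `mad_outliers`, the sample `readings`, and the threshold of 3.5 are assumptions chosen for illustration (3.5 is a commonly quoted rule of thumb, not a universal setting), and this is only one of many possible approaches.

```python
from statistics import median


def mad_outliers(values, threshold=3.5):
    """Return indices of values whose modified z-score exceeds the threshold."""
    med = median(values)
    # Median absolute deviation: a spread estimate that outliers barely affect.
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []  # no spread to measure against; this sketch flags nothing
    # 0.6745 rescales the MAD so the score is comparable to a standard
    # z-score when the data are roughly normally distributed.
    return [i for i, v in enumerate(values) if 0.6745 * abs(v - med) / mad > threshold]


# Hypothetical sensor readings with one value far from the rest.
readings = [10.1, 9.8, 10.0, 10.3, 9.9, 25.0, 10.2]
outliers = mad_outliers(readings)
print(outliers)
```

The median and MAD are used instead of the mean and standard deviation because a single extreme value can inflate the latter enough to hide itself.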

Applications

Unsupervised learning is applied in various fields, including:

- Image recognition: Identifying patterns and features in images without prior labeling.

- Genomics: Analyzing genetic data to find patterns and relationships.

- Natural language processing: Finding structure in human language data, such as learning word representations from unlabeled text.

Gallery

-

Unsupervised learning

-

Hopfield network vector

-

Boltzmann example

-

Restricted Boltzmann machine

-

Stacked Boltzmann

-

Helmholtz Machine

-

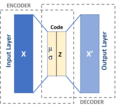

Autoencoder schema

-

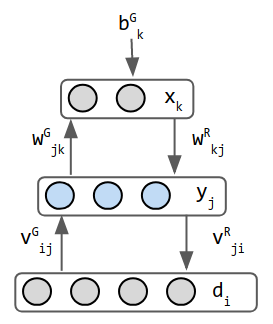

Variational Autoencoder blocks