Latest revision as of 01:19, 18 February 2025

Transfer Learning

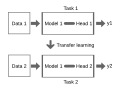

Transfer learning is a machine learning method in which a model developed for one task is reused as the starting point for a model on a second task. It is a popular approach in deep learning, where pre-trained models serve as the basis for new models.

Overview

Transfer learning is based on the idea that knowledge gained while solving one problem can be applied to a different but related problem. For example, knowledge gained while learning to recognize cars could be applied when trying to recognize trucks. This approach is particularly useful when the second task has limited training data.

Types of Transfer Learning

There are several types of transfer learning, including:

- Inductive Transfer Learning: The source and target tasks are different but related, and some labeled data is available for the target task. The model is trained on the source task and then fine-tuned on the target task.

- Transductive Transfer Learning: The source and target tasks are the same, but the domains are different. The model is trained on the source domain and applied to the target domain.

- Unsupervised Transfer Learning: No labeled data is available in either the source or the target domain. Knowledge from the source is used to improve an unsupervised target task, such as clustering or dimensionality reduction.
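The inductive case above (train on a source task, then fine-tune on a related target task) can be sketched in plain Python. This is a minimal illustration, not a reference implementation: the logistic-regression model, the two synthetic tasks, and the names `make_task`, `train`, and `log_loss` are all assumptions made for the example.

```python
import math
import random

def predict(w, x):
    # w = [bias, w0, w1]; returns the predicted probability of class 1.
    z = w[0] + w[1] * x[0] + w[2] * x[1]
    return 1.0 / (1.0 + math.exp(-z))

def log_loss(w, data):
    # Mean binary cross-entropy; eps guards against log(0).
    eps = 1e-12
    total = 0.0
    for x, y in data:
        p = predict(w, x)
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(data)

def train(w_init, data, steps, lr=0.5):
    # Full-batch gradient descent starting from w_init (which is not mutated).
    w = list(w_init)
    for _ in range(steps):
        grad = [0.0, 0.0, 0.0]
        for x, y in data:
            err = predict(w, x) - y
            grad[0] += err
            grad[1] += err * x[0]
            grad[2] += err * x[1]
        w = [wi - lr * g / len(data) for wi, g in zip(w, grad)]
    return w

def make_task(rng, n, slope):
    # Hypothetical synthetic task: label is 1 when x0 + slope * x1 > 0.
    data = []
    for _ in range(n):
        x = (rng.uniform(-1, 1), rng.uniform(-1, 1))
        data.append((x, 1 if x[0] + slope * x[1] > 0 else 0))
    return data

rng = random.Random(0)
source = make_task(rng, 500, slope=1.0)        # plentiful source data
target_train = make_task(rng, 10, slope=0.6)   # scarce, related target data

# Step 1: train on the source task from scratch.
w_source = train([0.0, 0.0, 0.0], source, steps=200)
# Step 2: fine-tune on the target task, starting from the source weights.
w_finetuned = train(w_source, target_train, steps=50)
```

The only difference between the two `train` calls is the starting point: fine-tuning passes `w_source` instead of zeros, which is how the source knowledge carries over.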

Applications

Transfer learning is widely used in applications including:

- Image Classification: Models pre-trained on large datasets such as ImageNet are fine-tuned for specific image classification tasks.

- Natural Language Processing (NLP): Models like BERT and GPT are pre-trained on large text corpora and fine-tuned for specific NLP tasks such as sentiment analysis or question answering.

- Speech Recognition: Transfer learning is used to adapt models to different languages or accents.
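A common way to reuse a pre-trained model in applications like these is to keep the pre-trained layers frozen and train only a new output layer, sometimes called feature extraction. The sketch below shows the idea in plain Python. It assumes a small fixed random projection as a stand-in for a real pre-trained network; `backbone`, `extract_features`, and `train_head` are illustrative names, not part of any library.

```python
import math
import random

rng = random.Random(0)

# Stand-in for pre-trained layers: a fixed 2 -> 4 projection followed by tanh.
# In a real setting these weights would come from training on a large source dataset.
backbone = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(4)]
snapshot = [row[:] for row in backbone]  # copy used to confirm the backbone stays frozen

def extract_features(x):
    # Forward pass through the frozen backbone; its weights are only read, never updated.
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in backbone]

def train_head(data, steps=100, lr=0.5):
    # Train a new logistic-regression head (bias + 4 weights) on the frozen features.
    head = [0.0] * 5
    for _ in range(steps):
        grad = [0.0] * 5
        for x, y in data:
            f = [1.0] + extract_features(x)
            z = sum(h * fi for h, fi in zip(head, f))
            p = 1.0 / (1.0 + math.exp(-z))
            for i in range(5):
                grad[i] += (p - y) * f[i]
        head = [h - lr * g / len(data) for h, g in zip(head, grad)]
    return head

# Hypothetical small target dataset: label is 1 when the first feature is positive.
target_data = []
for _ in range(20):
    x = (rng.uniform(-1, 1), rng.uniform(-1, 1))
    target_data.append((x, 1 if x[0] > 0 else 0))

head = train_head(target_data)
```

Because only `head` receives gradient updates, the number of trainable parameters stays small, which suits target tasks with little labeled data.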

Challenges

While transfer learning offers many benefits, it also presents challenges such as:

- Negative Transfer: Knowledge from the source task can degrade performance on the target task, so that the transferred model does worse than one trained on the target data alone.

- Domain Mismatch: Differences between the source and target domains can lead to poor performance.

- Data Scarcity: Limited data in the target domain can make it difficult to fine-tune the model effectively.
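One way to check for negative transfer is to compare the transferred model against a baseline trained on the target data alone, using the same held-out target examples. The sketch below assumes both models' predictions are already available. The function names are illustrative, and the labels and predictions are made-up toy values, not results from any experiment.

```python
def accuracy(predictions, labels):
    # Fraction of predictions that match the labels.
    correct = sum(1 for p, y in zip(predictions, labels) if p == y)
    return correct / len(labels)

def transfer_gain(transfer_preds, scratch_preds, labels):
    # Accuracy of the transferred model minus accuracy of the from-scratch baseline.
    # A negative value suggests negative transfer on this evaluation set.
    return accuracy(transfer_preds, labels) - accuracy(scratch_preds, labels)

# Toy held-out target labels and predictions (illustrative only).
labels         = [1, 0, 1, 1, 0, 0, 1, 0]
scratch_preds  = [1, 0, 1, 0, 0, 0, 1, 0]  # baseline trained on target data only
transfer_preds = [1, 1, 1, 0, 0, 1, 1, 0]  # model initialized from the source task

gain = transfer_gain(transfer_preds, scratch_preds, labels)
negative_transfer = gain < 0
```

On a small evaluation set a difference like this can be noise, so in practice the comparison would be repeated over several data splits or random seeds.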
